An Overview of Guided Policy Search

Relation to Model-free Policy Optimization Algorithms

Model-free policy optimization algorithms were described here.

- Direct Policy Search: learning complex, nonlinear policies with standard policy gradient methods

- Require a huge number of samples and iterations

- can be disastrously prone to poor local optimal

Guided Policy Search (GPS)

- Use

Differential Dynamic Programming (DDP)to generate “guiding samples”: assist the policy search by exploringhigh-reward regions. - An

importance sampledvariant of thelikehood ratio estimatoris used to incorporate these guiding samples directly into the policy search.

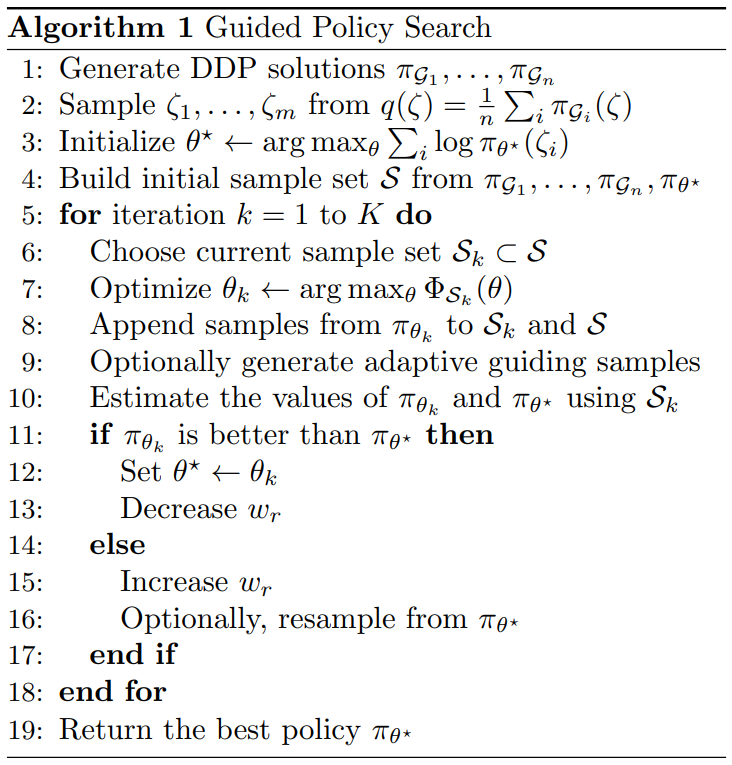

- GPS Algorithm Pseudocode