Robust Adversarial Reinforcement Learning

Motivation

How can we model uncertainties and learn a policy robust to all uncertainties?

How can we model the gap between simulations and real-world?

Inspired from \(H_\infty\) control methods, both modeling errors and differences in training and test scenarios can be viewed as extra forces or disturbances in the system.

Contributions

- Propose the idea of modeling uncertainties via an adversarial agent.

- Train a pair of agents, a protagonist and an adversary, where the protagonist learns to fulfil the original task goals while being robust to the disruptions generated by its adversary.

- The proposed algorithm is robust to model initializations, modeling errors and uncertainties.

Algorithm

- state: \(s_t\)

- action: \(a_t^1 \sim \mu(s_t)\) and \(a_t^2 \sim \nu(s_t)\)

- next state: \(s_{s+1} = P(s_t,a_t^1,a_t^2)\)

- reward: \(r_t = r(s_t,a_t^1,a_t^2)\), when \(r_t^1 = r_t\), while adversary gets a reward \(r_t^2 = -r_t\)

- one step of MDP: \((s_t, a_t^1, a_t^2, r_t^1, r_t^2, s_{t+1})\)

Maximize the following reward function:

\[R^1 = \mathbb{E}_{s_0 \sim \rho, a^1 \sim \mu(s), a^2 \sim \nu(s)}\left[ \sum_{t=0}^{T-1} r_1(s,a^1,a^2) \right].\]Thus,

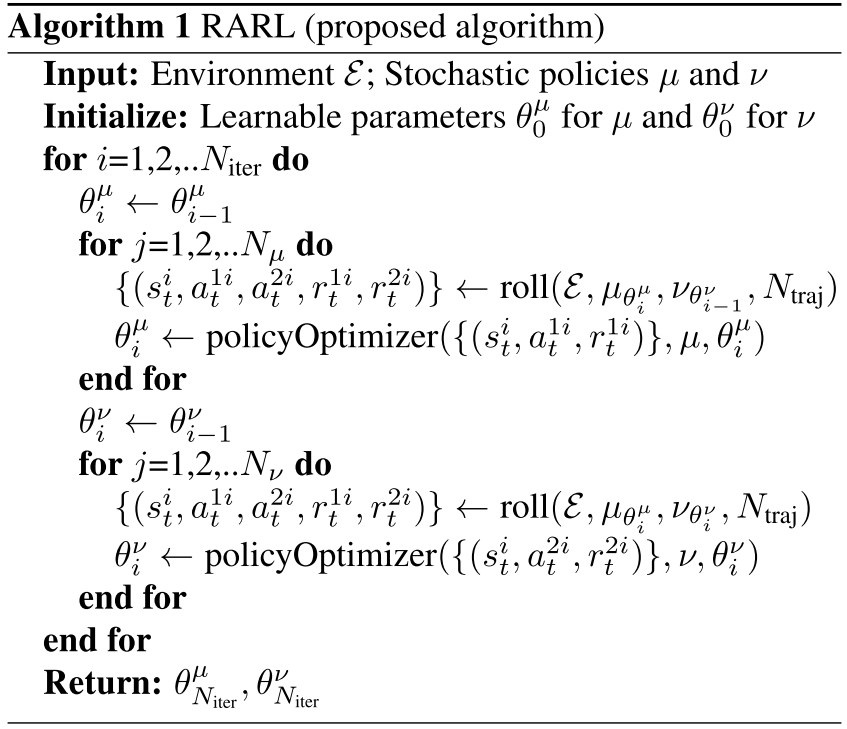

\[R^{1*} = \min_\nu \max_\mu R^1(\mu, \nu) = \max_\mu \min_\nu R^1(\mu, \nu).\]The algorithm optimizes both of the agents using the alternating procedure:

- Learn the protagonist’s policy while holding the adversary’s policy fixed.

- The protagonist’s policy is held constant and the adversary’s policy is learned.

- Algorithm of RARL