A Survey of UAV Simulation With Reinforcement Learning

Simulation is an invaluable tool for the robotics researcher. It allows developing and testing algorithms in a safe and inexpensive manner, without the time-consuming and expensive process of dealing with real-world hardware.

- Simulators allow engineers to identify errors early in the development process.

- Simulation systems provide not only massive amounts of data, but also the labels required for training algorithms.

- They provide a safe environment for learning from experience, which is useful for RL methods.

The ideal simulator has three main characteristics:

- Fast, to collect a large amount of data with limited time and compute, such as MuJoCo.

- Physically accurate, to represent the dynamics of the real world with high fidelity.

- Photo-realistic, to minimize the discrepancy between simulated and real-world sensor observations.

PX4 Simulation Doc

AirSim

Air Learning

- Code

- Python

- State: Image, UAV state

- Action: Forward, Left, Right, Back

GymFC

- Code

- Python

- State: rotor speed, angular velocity error

- Action: control signals of each motor

Ethz reinmav-gym

- Code

- Python

- State: Position, quaternion, velocity

- Action: angular velocity, thrust

RAI

- Code

- C++

- State: Rotation Matrix, position, linear velocity, angular velocity

- Action: rotor thrusts

Drone Racing Simulators

- Velocidrone: https://www.velocidrone.com/

- The Drone Racing League (DRL): https://thedroneracingleague.com/

- Liftoff: https://www.liftoff-game.com/

- Unity: https://unity.com/

PaddlePaddle/RLSchool

- Code

- DDPG for UAV velocity control

- PARL-PPO for UAV control

- State: sensor measurements, flight state, and task-related state.

- Action: voltage value of four propeller motors, each value is in range \([0.1,15.0]\).

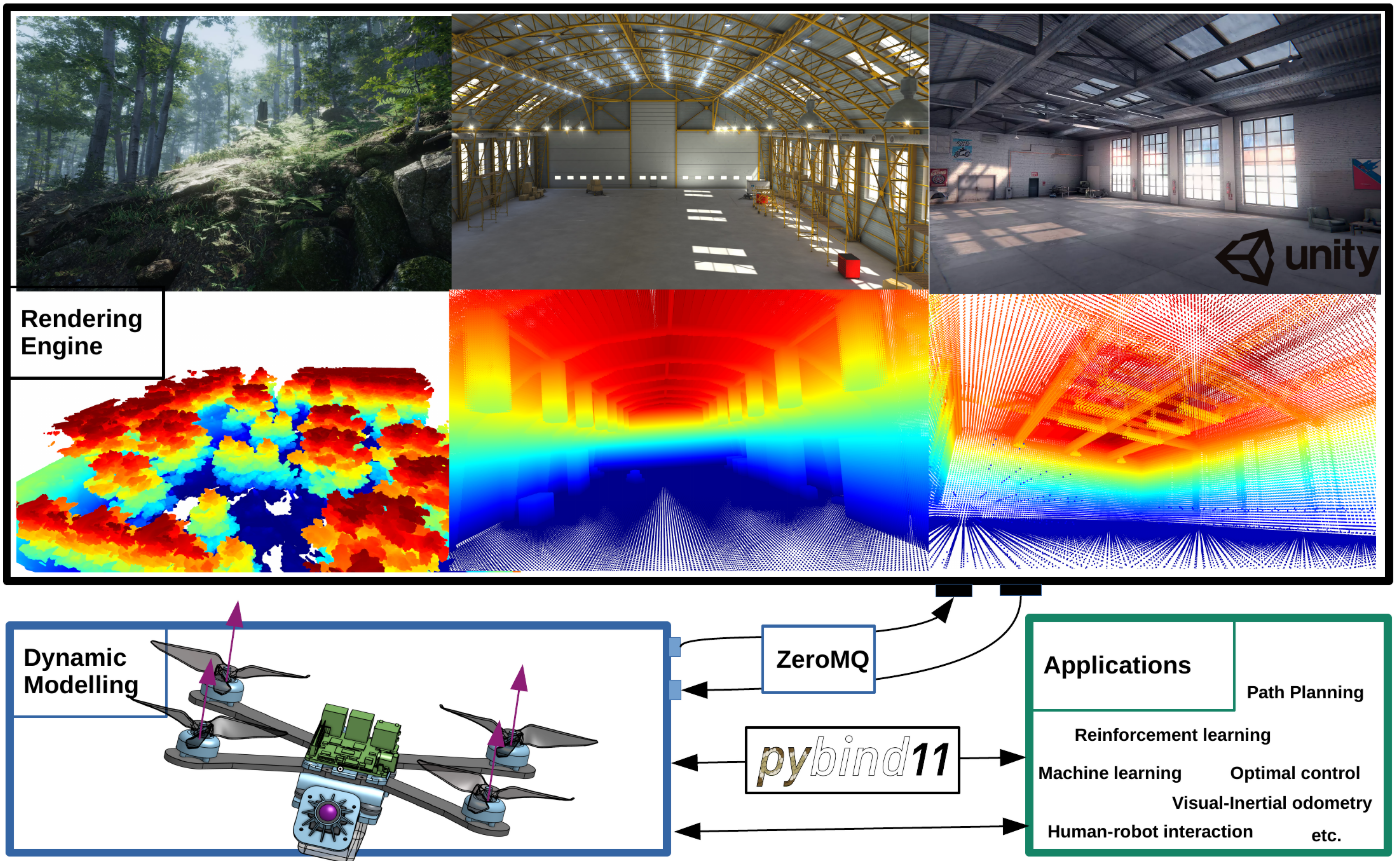
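
The bounded action range listed for RLSchool above can be handled with a simple rescaling step. The sketch below assumes the policy emits values in \([-1, 1]\) (an assumption, not something the notes state) and maps them to the \([0.1, 15.0]\) voltage range; `scale_action` is a hypothetical helper, not part of RLSchool.

```python
def scale_action(raw, low=0.1, high=15.0):
    """Map policy outputs in [-1, 1] to motor voltages in [low, high]."""
    voltages = []
    for a in raw:
        a = max(-1.0, min(1.0, a))  # clip to the assumed policy output range
        voltages.append(low + (a + 1.0) * 0.5 * (high - low))
    return voltages


# Four motor commands; the last one is out of range and gets clipped.
voltages = scale_action([-1.0, 0.0, 1.0, 2.5])
```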

Flightmare

- https://uzh-rpg.github.io/flightmare/

- Code

- Two main components (decoupled and run independently):

- A configurable rendering engine built on Unity, up to 230 Hz for the rendering block.

- A flexible physics engine for dynamics simulation, up to 200,000 Hz for the dynamics block.

- The trade-off between accuracy and speed can be set by the end users.

- The interface between the rendering engine and the quadrotor dynamics is implemented using the high-performance asynchronous messaging library ZeroMQ.

- Multi-modal sensor suite:

- Visual: RGB, depth, semantic segmentation.

- IMU.

- 3D point-cloud of the scene.

- API for RL: a Python wrapper implements an OpenAI Gym-style interface for RL tasks.

- Interface for multi-agent simulation, which can simulate hundreds of quadrotors in parallel.

- Integrates with a virtual-reality headset for interaction with the environment.

- Used for the following quadrotor tasks:

- quadrotor control policy learning.

- quadrotor path-planning in a complex 3D environment.

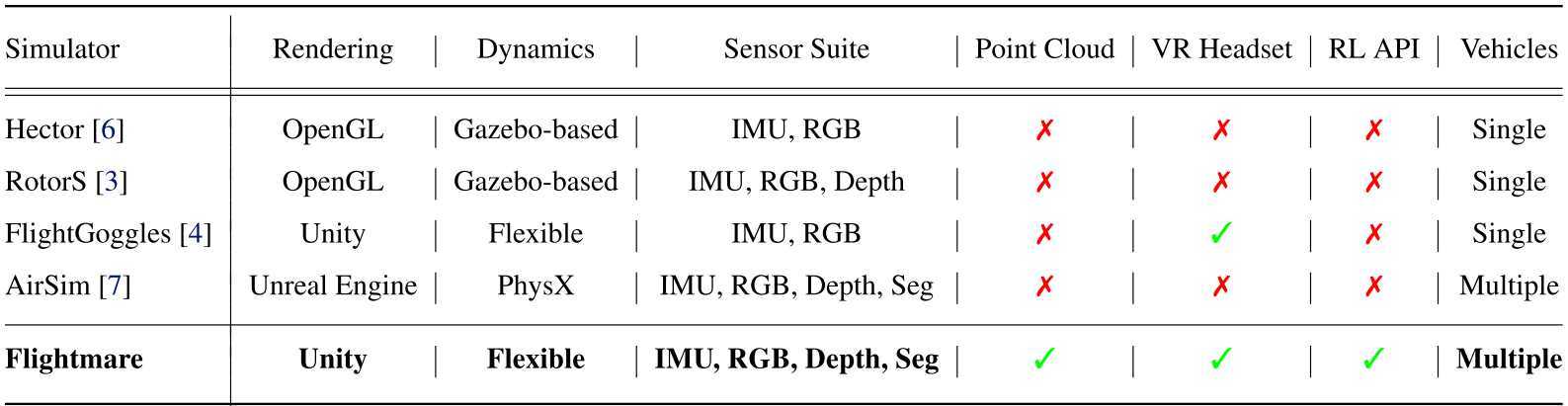
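
A Gym-style interface, as mentioned in the Flightmare notes above, boils down to `reset()` and `step(action)`. The following is a minimal sketch on a toy 1-D altitude task, not Flightmare's actual API: the class `ToyHoverEnv`, its parameters, and the rates are all hypothetical. It also illustrates running the physics at a higher rate than the control loop.

```python
import random


class ToyHoverEnv:
    """Hypothetical 1-D altitude task with a Gym-style reset/step interface."""

    def __init__(self, physics_hz=1000, control_hz=50, mass=1.0, seed=0):
        self.dt = 1.0 / physics_hz
        self.substeps = physics_hz // control_hz  # physics ticks per action
        self.mass = mass
        self.g = 9.81
        self.rng = random.Random(seed)
        self.z = 0.0
        self.vz = 0.0

    def reset(self):
        # Start at rest from a random altitude around the 1 m target.
        self.z = 1.0 + self.rng.uniform(-0.5, 0.5)
        self.vz = 0.0
        return (self.z, self.vz)

    def step(self, thrust):
        # Integrate the dynamics several times for each control action.
        for _ in range(self.substeps):
            acc = thrust / self.mass - self.g
            self.vz += acc * self.dt
            self.z += self.vz * self.dt
        reward = -abs(self.z - 1.0)
        done = self.z <= 0.0 or self.z >= 5.0
        return (self.z, self.vz), reward, done, {}


env = ToyHoverEnv()
obs = env.reset()
for _ in range(100):
    z, vz = obs
    # A PD controller stands in for a learned policy.
    thrust = env.mass * (env.g + 8.0 * (1.0 - z) - 4.0 * vz)
    obs, reward, done, info = env.step(thrust)
    if done:
        break
```

An RL library would replace the PD controller with a policy network and call the same two methods.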

RotorS VS Hector VS AirSim VS CARLA VS FlightGoggles VS Flightmare

- RotorS

- built on Gazebo with ROS.

- provides several quadrotors such as AscTec Hummingbird, Pelican, and Firefly.

- used for path-planning, mapping, exploration, etc.

- Gazebo has limited rendering capabilities and is not designed for efficient parallel dynamics simulation.

- Hector

- built on Gazebo with ROS.

- used for autonomous mapping and navigation with rescue robots.

- AirSim

- Photo-realistic simulator built on Unreal Engine.

- limited simulation speed, which makes it difficult to apply to model-free RL tasks (e.g. training an end-to-end control policy for quadrotor stabilization or flying through a fast-moving gate).

- CARLA

- Photo-realistic simulator built on Unreal Engine.

- mainly made for autonomous driving research and only provides dynamics of ground vehicles.

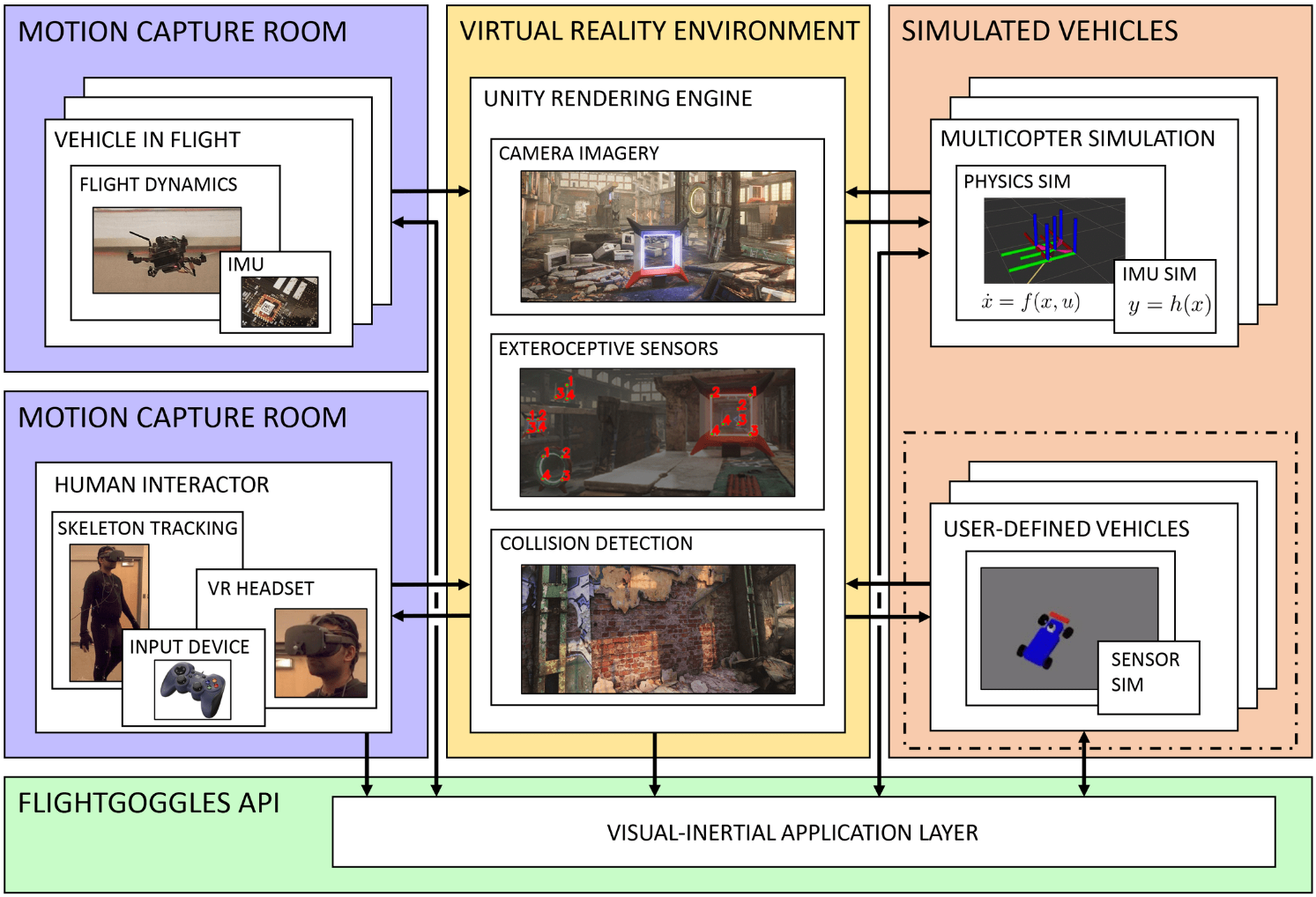

- FlightGoggles

- photo-realistic sensor simulator for perception-driven robotic vehicles.

- exteroceptive sensors:

- RGB-D cameras.

- distortion-free.

- camera projection model with optional motion blur, lens dirt, auto-exposure, and bloom.

- parameters can be changed via API using ROS param or LCM config.

- time-of-flight distance sensors.

- a downward-facing single-point range finder for altitude estimation.

- infrared radiation (IR) beacon sensors.

- provide image-space measurements of IR beacons in the camera’s field of view.

- the beacons can be placed at static locations or on the moving objects.

- two separate components (modular architecture):

- a photo-realistic rendering engine built on Unity.

- utilizes the position and orientation of the vehicle to simulate camera imagery and exteroceptive sensors, and to detect collisions (using polygon colliders).

- dynamic elements, such as moving obstacles, lights, vehicles, and human actors, can be added.

- a quadrotor dynamics simulation implemented in C++.

- vehicle state is updated at 960 Hz.

- vehicle-in-the-loop simulation (using a motion capture system).

- circumvents the need to estimate complex and hard-to-model interactions such as aerodynamics, motor mechanics, battery electrochemistry, and behavior of other agents.

- acquires the pose of the vehicle in real time.

- real dynamics, real inertial sensing.

- can be seen as an extension of customary hardware-in-the-loop configurations.

- API provides:

- dynamics states.

- control inputs.

- sensor outputs.

- Message interface:

- ROS.

- LCM.

- useful for rendering camera images given trajectories and inertial measurements from vehicles flying in the real world.

- decoupling the dynamics modelling from the photo-realistic rendering engine.

- Applications:

- visual inertial navigation research for fast and agile vehicles:

- Visual inertial odometry (VIO) to estimate the vehicle state.

- environment and camera parameters can be changed, which makes it possible to quickly verify VIO performance over a multitude of scenarios.

- human-vehicle interaction.

- active sensor selection.

- multi-agent systems.

- AlphaPilot challenge:

- an autonomous drone racing challenge.

- test the autonomous guidance, navigation, and control capability in a realistic simulation environment.

- sensor data provided (via ROS API):

- (stereo) cameras.

- IMU.

- downward-facing time-of-flight range sensor.

- infrared gate beacons.

- the autonomous system obtains sensor measurements and provides collective thrust and attitude rate inputs to the quadrotor’s low-level rate-mode controller.

- methods:

- end-to-end learning-based method.

- traditional pipelines: estimation, planning, and control.

- estimation: Kalman filter, ROVIO, VINS-Mono, etc.

- planning: visual servo using infrared beacons, polynomial trajectory planning, manually-defined waypoints, sampling-based techniques for building trajectory libraries.

- control: linear control, model predictive control, geometric and backstepping control.

- environment: FlightGoggles Abandoned Factory.

- the exact gate locations were subject to random unknown perturbations.

- Score = \(10 \times \text{gates} - \text{time}\).
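
The scoring rule above can be read as "each gate is worth ten units of time". A small worked example, assuming that gates means the number of gates passed and that time is measured in seconds (both assumptions; the numbers are made up for illustration):

```python
def race_score(gates_passed, time_s):
    """Score = 10 * gates - time, following the rule in the notes."""
    return 10 * gates_passed - time_s


# A fast run that misses two gates versus a slower run that passes all ten.
fast_partial = race_score(gates_passed=8, time_s=20.0)
slow_full = race_score(gates_passed=10, time_s=45.0)
```

Under these made-up numbers the fast partial run scores higher, so skipping a gate pays off only when it saves more than ten seconds.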

Rendering Engine

- built with Unity.

- various high-quality 3D environments: warehouse, nature forest, etc.

- users can add environment perturbation, such as wind.

- A new environment or asset can easily be created or directly purchased from the Unity Asset Store.

- Sensors:

- RGB cameras with ground-truth depth and semantic segmentation.

- users can change the camera intrinsics such as field of view, focal length, and lens distortion.

- sensor noise: can also simulate physical effects on the camera including motion blur, lens dirt, and bloom.

- Rangefinders.

- Collision detection between agents and their surroundings.

- Provide a graphical user interface (GUI) as well as a C++ API for users to extract ground-truth point clouds of the environment.
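
Field of view and focal length, both listed above as adjustable camera intrinsics, are tied together in the pinhole model by \(f = (w/2) / \tan(\text{FOV}/2)\). The sketch below uses that standard relation; the function names and the 640-pixel image are illustrative assumptions, not the simulator's API, and lens distortion is ignored.

```python
import math


def focal_length_px(image_width_px, horizontal_fov_deg):
    """Pinhole focal length in pixels from image width and horizontal FOV."""
    half_fov = math.radians(horizontal_fov_deg) / 2.0
    return (image_width_px / 2.0) / math.tan(half_fov)


def project(point_cam, fx, fy, cx, cy):
    """Project a 3-D point in the camera frame (z forward) to pixel coordinates."""
    x, y, z = point_cam
    if z <= 0.0:
        return None  # the point is behind the camera
    return (fx * x / z + cx, fy * y / z + cy)


# A 90 degree horizontal FOV on a 640 x 480 image (assumed values).
fx = focal_length_px(640, 90.0)
u, v = project((1.0, 0.0, 2.0), fx, fx, 320.0, 240.0)
```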

Dynamic Modelling

- Gazebo-based quadrotor dynamics: slower but more realistic.

- basic model: noise-free.

- more advanced rigid-body dynamics: including friction and rotor drag.

- Real-world dynamics, offers the interface to combine real-world dynamics with photo-realistic rendering.

- inertial sensing and motor encoders depend directly on the physics model.

- A parallelized implementation of classical quadrotor dynamics, useful for large-scale RL applications.

- control modes:

- body-rate mode: a low-level controller tracks the desired body rates.

- rotor-thrusts mode: the low-level controller generates the desired rotor thrusts for each motor.
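
To make the two control modes more concrete, here is a rough sketch of what a body-rate controller might do: a proportional rate controller produces body torques, which are then allocated to four rotor thrusts. This is a textbook-style illustration, not the simulator's implementation; the "+" rotor layout, the gain `kp`, the arm length, and the torque coefficient `kappa` are all assumed values, and thrust limits are ignored.

```python
def rate_controller(omega_des, omega, inertia, kp=20.0):
    """P controller on body rates; returns body torques (N*m).

    inertia is the diagonal (Jx, Jy, Jz). The gyroscopic term
    omega x (J omega) is added as feed-forward.
    """
    jw = [inertia[i] * omega[i] for i in range(3)]
    gyro = [
        omega[1] * jw[2] - omega[2] * jw[1],
        omega[2] * jw[0] - omega[0] * jw[2],
        omega[0] * jw[1] - omega[1] * jw[0],
    ]
    return [inertia[i] * kp * (omega_des[i] - omega[i]) + gyro[i] for i in range(3)]


def allocate(total_thrust, torque, arm=0.17, kappa=0.016):
    """Invert the '+' configuration mixer to get four rotor thrusts (N).

    Assumed layout: rotor 1 front (+x), 2 left (+y), 3 back (-x), 4 right (-y);
    rotors 1 and 3 spin opposite to rotors 2 and 4.
    """
    tx, ty, tz = torque
    f1 = total_thrust / 4 - ty / (2 * arm) + tz / (4 * kappa)
    f2 = total_thrust / 4 + tx / (2 * arm) - tz / (4 * kappa)
    f3 = total_thrust / 4 + ty / (2 * arm) + tz / (4 * kappa)
    f4 = total_thrust / 4 - tx / (2 * arm) - tz / (4 * kappa)
    return [f1, f2, f3, f4]


def mix(f, arm=0.17, kappa=0.016):
    """Forward model: rotor thrusts -> (total thrust, body torques)."""
    f1, f2, f3, f4 = f
    torque = [arm * (f2 - f4), arm * (f3 - f1), kappa * (f1 - f2 + f3 - f4)]
    return f1 + f2 + f3 + f4, torque


# Body-rate mode: the command is (total thrust, desired body rates).
torque = rate_controller([0.5, 0.0, 0.0], [0.0, 0.0, 0.0], (0.007, 0.007, 0.012))
thrusts = allocate(total_thrust=7.2, torque=torque)
```

In a rotor-thrusts mode, a policy would output the four thrusts directly and skip both functions.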

Learning a Policy for Quadrotor Control

Three tasks:

- stabilize a quadrotor from randomly initialized poses.

- state: \((p, \theta, v)\).

- action: \((c, \omega_x, \omega_y, \omega_z)\).

- stabilize a quadrotor from randomly initialized poses under a single motor failure.

- state: \((p, \theta, v, \omega)\).

- action: \((f_1, f_2, f_3)\).

- control a quadrotor to fly through static gates as fast as possible.

- state: \((p, \theta, v, \omega, p_{gate}, \theta_{gate})\).

- action: \((f_1, f_2, f_3, f_4)\).

Method:

- Train neural network controllers for each task using the PPO algorithm and the stable-baselines implementation (a fork of OpenAI Baselines).

- Simulate 100 quadrotors in parallel for trajectory sampling and collect a total of 25 million time steps for each task.
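
The sampling budget above translates into simple arithmetic. The sketch below uses the two figures from the notes (100 parallel quadrotors, 25 million time steps); the rollout length per update and the stand-in `CountingEnv` are assumptions made only to show how a batch is assembled from parallel environments.

```python
NUM_ENVS = 100            # quadrotors simulated in parallel (from the notes)
TOTAL_STEPS = 25_000_000  # time steps collected per task (from the notes)
ROLLOUT_LEN = 250         # steps per environment per update (assumed)

steps_per_env = TOTAL_STEPS // NUM_ENVS
batch_size = NUM_ENVS * ROLLOUT_LEN
num_updates = TOTAL_STEPS // batch_size


class CountingEnv:
    """Stand-in environment that only counts how often it is stepped."""

    def __init__(self):
        self.steps = 0

    def reset(self):
        return 0.0

    def step(self, action):
        self.steps += 1
        return 0.0, 0.0, False, {}


def collect_batch(envs, policy, rollout_len):
    """Step every environment rollout_len times and gather the transitions."""
    batch = []
    obs = [env.reset() for env in envs]
    for _ in range(rollout_len):
        for i, env in enumerate(envs):
            action = policy(obs[i])
            next_obs, reward, done, _ = env.step(action)
            batch.append((obs[i], action, reward))
            obs[i] = env.reset() if done else next_obs
    return batch


envs = [CountingEnv() for _ in range(NUM_ENVS)]
batch = collect_batch(envs, policy=lambda o: 0.0, rollout_len=ROLLOUT_LEN)
```

With these assumed settings each environment contributes 250,000 steps in total, and one policy update consumes a batch of 25,000 transitions.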

Point Cloud and Path Planning

- Provide an interface to export the 3D information of the full environment as a point cloud with any desired resolution.

- The example shows a section of the complex nature forest environment, exported at a resolution of 0.1 m, which contains detailed 3D structure information of the forest.

- compute the shortest collision-free path between two points, from point A to point B.

- run OMPL on the point cloud extracted from the forest, with a default solver, for path planning.
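
The idea of planning on an exported point cloud can be sketched without OMPL. The example below swaps in a much simpler technique: the points are binned into a voxel grid at the export resolution, and breadth-first search finds a shortest path on the 6-connected free voxels. It is not what OMPL's sampling-based planners do; the wall obstacle, grid bounds, and function names are all made up for illustration.

```python
import math
from collections import deque


def voxelize(points, resolution):
    """Map 3-D points to the set of occupied integer voxel indices."""
    return {tuple(math.floor(c / resolution) for c in p) for p in points}


def shortest_path(occupied, start, goal, bounds):
    """Breadth-first search over free voxels; returns a voxel list or None."""
    if start in occupied or goal in occupied:
        return None
    parent = {start: None}
    queue = deque([start])
    moves = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    while queue:
        cur = queue.popleft()
        if cur == goal:
            path = []
            while cur is not None:  # walk back through the parents
                path.append(cur)
                cur = parent[cur]
            return path[::-1]
        for d in moves:
            nxt = (cur[0] + d[0], cur[1] + d[1], cur[2] + d[2])
            inside = all(0 <= nxt[k] < bounds[k] for k in range(3))
            if inside and nxt not in occupied and nxt not in parent:
                parent[nxt] = cur
                queue.append(nxt)
    return None


RES = 0.1  # metres per voxel, matching the export resolution in the notes
# Synthetic "point cloud": a wall at x = 0.55 m with a gap at the far end.
wall = [((5 + 0.5) * RES, (y + 0.5) * RES, (z + 0.5) * RES)
        for y in range(8) for z in range(3)]
occupied = voxelize(wall, RES)
path = shortest_path(occupied, start=(0, 0, 0), goal=(9, 0, 0), bounds=(10, 10, 3))
```

Grid search scales poorly with resolution and volume, which is one reason sampling-based planners are the usual choice for large 3D scenes.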

Other Applications

- Virtual reality and safe human-robot interaction.

- Can be used to study the implications of large-scale multi-robot systems.

- Can be extremely useful for testing odometry and SLAM systems.

- Can also be used to learn deep sensorimotor policies via imitation learning.